Reliability Tests

The following test results show the template verification reliability of the MegaMatcher ID system. Results are given below for each supported biometric modality:

- Face template verification reliability evaluation

- Voice template verification reliability evaluation

- Slap (contactless fingerprints) template verification reliability evaluation

The face liveness check algorithm was tested by iBeta and certified as compliant with ISO 30107-3 Biometric Presentation Attack Detection Standards.

Face template verification reliability evaluation

The University of Massachusetts Labeled Faces in the Wild (LFW) public dataset was used to test the face template verification reliability of the Neurotechnology MegaMatcher ID system. The following modifications were applied to the dataset:

- According to the original protocol, only 6,000 pairs (3,000 genuine and 3,000 impostor) should be used to report the results. However, recent algorithms are "very close to the maximum achievable by a perfect classifier" [source] on that protocol. Because Neurotechnology algorithms were not trained on any image from this dataset, results are instead reported for matching every pair among all 13,233 face images of 5,729 persons.

- All identity mistakes listed on the LFW website were fixed. Several additional issues not listed there were also fixed.

- Some images from the LFW dataset contained multiple faces. The correct faces for assigned identities were chosen manually to solve these ambiguities.
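As a rough illustration of the scale of an all-pairs comparison, the sketch below counts the unordered pairs among 13,233 images and labels pairs as genuine or impostor on a toy identity list. It assumes every unordered pair is matched exactly once; the source does not state whether any pairs were excluded. The `label_pairs` helper and the toy names are hypothetical.

```python
from itertools import combinations
from math import comb


def label_pairs(identities):
    """Yield (i, j, is_genuine) for every unordered pair of images.

    A pair is genuine when both images carry the same identity label.
    """
    for i, j in combinations(range(len(identities)), 2):
        yield i, j, identities[i] == identities[j]


# Unordered pairs among the 13,233 LFW images (assumes each pair is used once).
total_pairs = comb(13_233, 2)
print(total_pairs)  # 87549528

# Toy example with hypothetical identities: 4 images of 3 persons.
toy = ["anna", "anna", "ben", "carl"]
pairs = list(label_pairs(toy))
genuine = sum(1 for _, _, is_genuine in pairs if is_genuine)
impostor = len(pairs) - genuine
print(genuine, impostor)  # 1 5
```

Under this assumption the full comparison covers roughly 87.5 million pairs, compared with 6,000 in the original protocol.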

Three experiments were performed with the dataset:

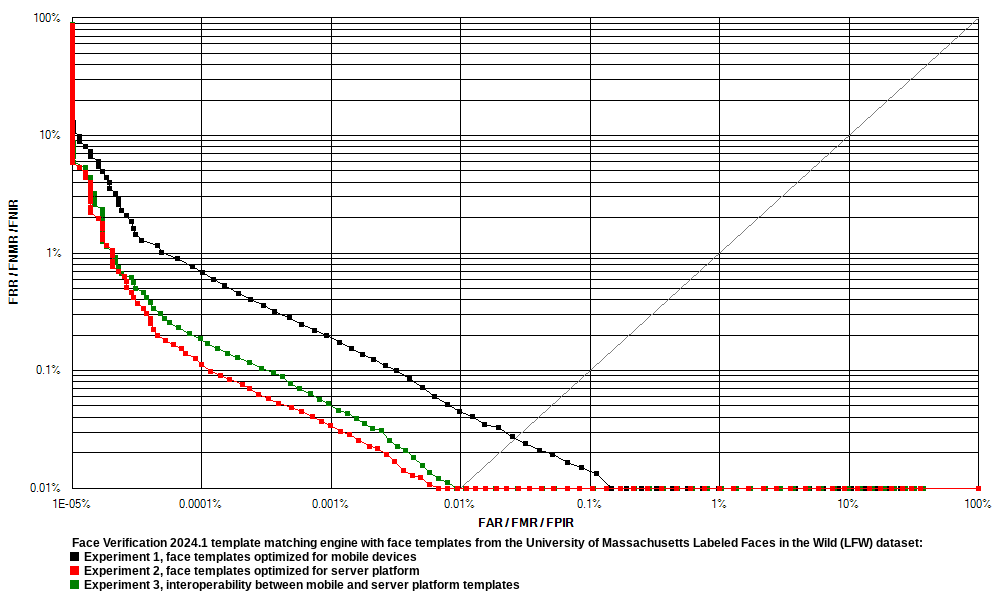

- Experiment 1 used face templates extracted with settings optimized for mobile devices. The MegaMatcher ID 2025.1 algorithm reliability in this experiment is shown on the ROC chart as the black curve.

- Experiment 2 used face templates extracted with settings optimized for server platforms. The MegaMatcher ID 2025.1 algorithm reliability in this experiment is shown on the ROC chart as the red curve.

- Experiment 3 matched face templates from Experiment 1 against those from Experiment 2 to test interoperability between them. The MegaMatcher ID 2025.1 algorithm reliability in this experiment is shown on the ROC chart as the green curve.

Receiver operating characteristic (ROC) curves are commonly used to demonstrate the recognition quality of an algorithm. ROC curves show the dependence of the false rejection rate (FRR) on the false acceptance rate (FAR). The equal error rate (EER) is the error rate at the operating point where FAR and FRR are equal.
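The metrics named above can be sketched in a few lines. This is a minimal illustration, not the evaluation code used for these tests: it assumes that higher scores mean a better match, that a pair is accepted when its score is at or above the threshold, and that the EER is approximated at the threshold where FAR and FRR are closest. All function names and the toy scores are hypothetical.

```python
import numpy as np


def far_frr(genuine, impostor, threshold):
    """Return (FAR, FRR) when scores >= threshold are accepted."""
    far = float(np.mean(np.asarray(impostor, dtype=float) >= threshold))
    frr = float(np.mean(np.asarray(genuine, dtype=float) < threshold))
    return far, frr


def roc_points(genuine, impostor):
    """Return (threshold, FAR, FRR) at every observed score."""
    scores = np.concatenate([np.asarray(genuine, dtype=float),
                             np.asarray(impostor, dtype=float)])
    return [(t, *far_frr(genuine, impostor, t)) for t in np.unique(scores)]


def frr_at_far(genuine, impostor, target_far):
    """Lowest FRR among observed thresholds whose FAR is <= target_far."""
    candidates = [frr for _, far, frr in roc_points(genuine, impostor)
                  if far <= target_far]
    # If no observed threshold is strict enough, everything must be rejected.
    return min(candidates, default=1.0)


def approx_eer(genuine, impostor):
    """Approximate EER at the threshold where |FAR - FRR| is smallest."""
    _, far, frr = min(roc_points(genuine, impostor),
                      key=lambda p: abs(p[1] - p[2]))
    return (far + frr) / 2


# Toy similarity scores (hypothetical values).
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.5]

print(approx_eer(genuine_scores, impostor_scores))        # 0.25
print(frr_at_far(genuine_scores, impostor_scores, 0.25))  # 0.0
print(frr_at_far(genuine_scores, impostor_scores, 0.0))   # 0.25
```

Reporting FRR at a fixed FAR, as the results tables do, corresponds to choosing the threshold from the impostor scores first and then measuring how many genuine pairs fall below it.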

MegaMatcher ID 2025.1 face template verification algorithm reliability testing results with face images from the LFW dataset

| | Experiment 1 | Experiment 2 | Experiment 3 |
|---|---|---|---|
| FRR at 0.1 % FAR | 0.0149 % | 0.0029 % | 0.0025 % |
| FRR at 0.01 % FAR | 0.0446 % | 0.0078 % | 0.0099 % |
| FRR at 0.001 % FAR | 0.1985 % | 0.0339 % | 0.0520 % |

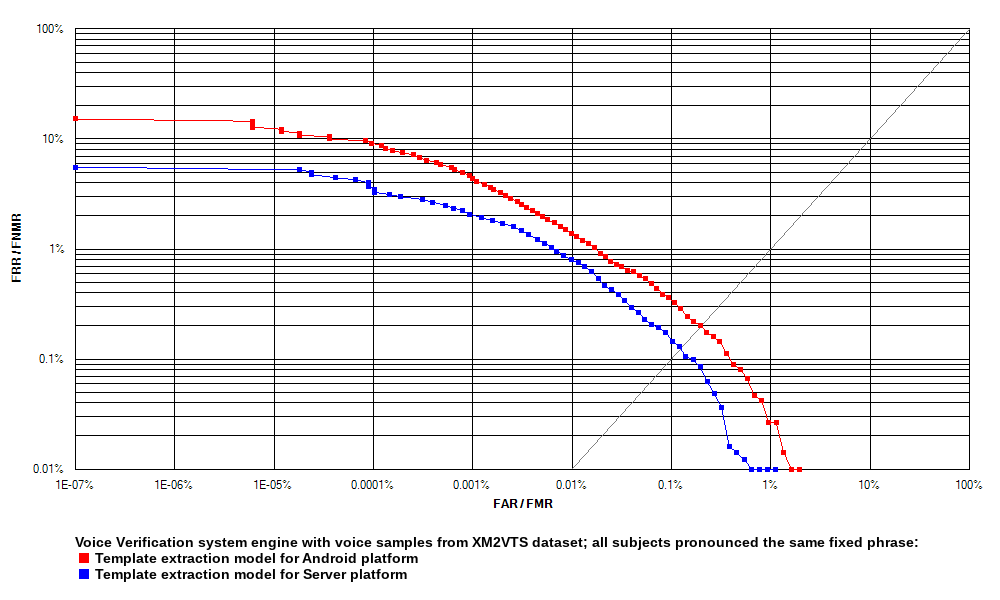

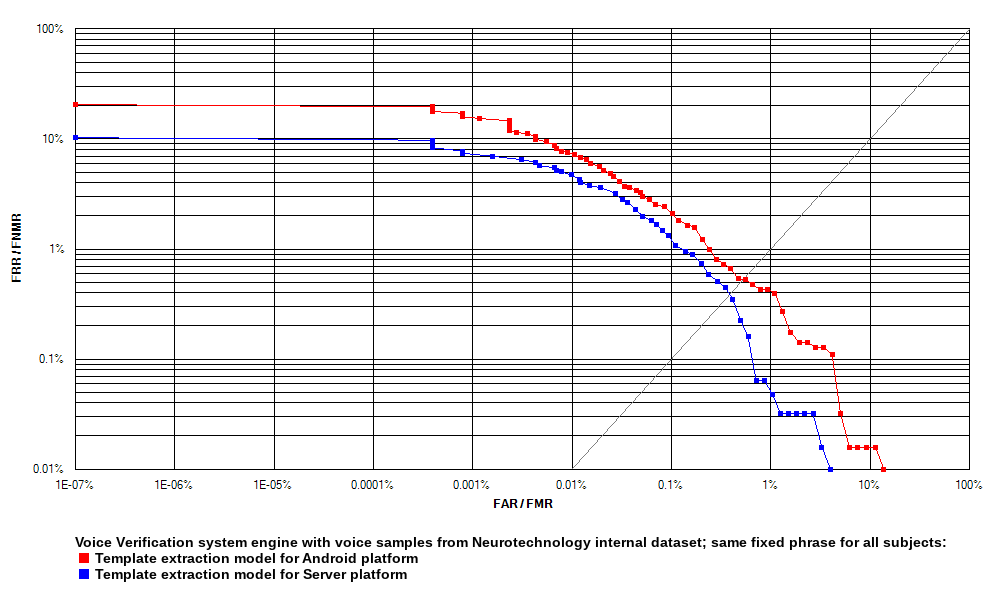

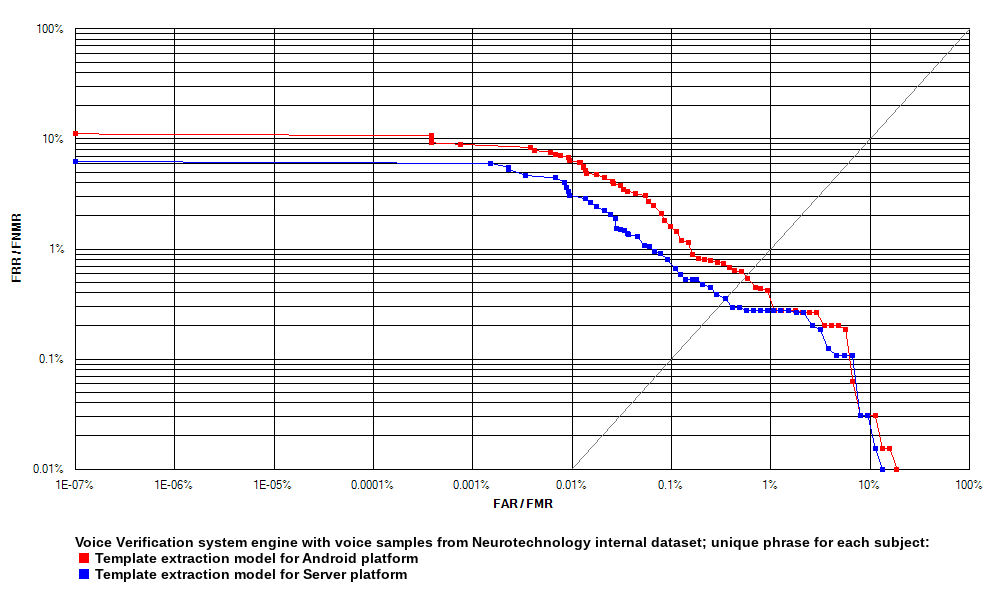

Voice template verification reliability evaluation

The MegaMatcher ID 2025.1 voice template matching algorithm has been tested with voice samples taken from the XM2VTS Database, as well as with voice samples from Neurotechnology's internal datasets. In two of the datasets, all subjects pronounced the same fixed phrase; in the third dataset, each subject pronounced a unique phrase.

Gallery and probe were populated in this way:

- Gallery – each voice sample from each source dataset was truncated to 9 seconds by removing the excess at the end.

- Probe – each voice sample from each source dataset was processed in this way:

  - Each voice sample was truncated to 9 seconds by removing the excess at the end and added to the probe.

  - The truncated 9-second samples were cut into three 3-second samples, thus adding three additional samples out of each original sample to the probe.

  - The truncated 9-second samples were truncated again to 6 seconds by removing the excess at the end and added to the probe.

  - The truncated 9-second samples were truncated again to 6 seconds by removing the excess at the beginning and added to the probe.
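The gallery and probe construction described above can be sketched as simple slicing. This is an illustration only: it assumes each recording is a sequence of audio samples at a known sample rate and is at least 9 seconds long, and the function name `build_gallery_and_probe` is hypothetical.

```python
def build_gallery_and_probe(recording, rate):
    """Split one recording into gallery and probe samples.

    recording: sequence of audio samples (assumed to last at least 9 s)
    rate:      samples per second
    """
    nine = recording[: 9 * rate]          # keep the first 9 s, drop the rest

    gallery = [nine]

    probe = [nine]                        # the 9-second sample itself
    probe += [nine[k * 3 * rate:(k + 1) * 3 * rate]
              for k in range(3)]          # three consecutive 3-second pieces
    probe.append(nine[: 6 * rate])        # 6 s, excess removed at the end
    probe.append(nine[3 * rate:])         # 6 s, excess removed at the beginning
    return gallery, probe


# Toy recording: 12 "seconds" at 10 samples per second (hypothetical values).
rate = 10
recording = list(range(12 * rate))
gallery, probe = build_gallery_and_probe(recording, rate)

print(len(gallery), len(probe))           # 1 6
print([len(p) // rate for p in probe])    # [9, 3, 3, 3, 6, 6]
```

Each source sample therefore contributes one gallery sample and six probe samples, which agrees with the dataset table, where every probe size is six times the gallery size (for example, 14,160 = 6 × 2,360).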

Voiceprint datasets used for MegaMatcher ID 2025.1 system voice engine testing

| | Experiment 1 | Experiment 2 | Experiment 3 |
|---|---|---|---|
| Source data | XM2VTS (phrase 1) | Neurotechnology internal dataset 1 | Neurotechnology internal dataset 2 |
| Fixed/unique phrase | Fixed | Fixed | Unique |
| Subjects in the dataset | 295 | 42 | 42 |
| Recording sessions per subject | 8 | 1 - 10 | 1 - 10 |
| Total gallery size (voice samples) | 2,360 | 305 | 309 |
| Total probe size (voice samples) | 14,160 | 1,830 | 1,854 |
| Total number of template comparisons | 16,687,566 | 261,960 | 269,100 |

Two tests were performed during each experiment:

- Test 1 used the compact template extraction model, designed for the Android platform. The reliability of matching the extracted templates is shown as red curves on the ROC charts.

- Test 2 used the large template extraction model, designed for server platforms. The reliability of matching the extracted templates is shown as blue curves on the ROC charts.

Receiver operating characteristic (ROC) curves are commonly used to demonstrate the recognition quality of an algorithm. ROC curves show the dependence of the false rejection rate (FRR) on the false acceptance rate (FAR). Charts with ROC curves for each of the experiments are available below.

MegaMatcher ID 2025.1 system's voice engine reliability tests

| | Exp. 1, Test 1 | Exp. 1, Test 2 | Exp. 2, Test 1 | Exp. 2, Test 2 | Exp. 3, Test 1 | Exp. 3, Test 2 |
|---|---|---|---|---|---|---|
| EER | 0.1982 % | 0.1246 % | 0.5041 % | 0.3971 % | 0.5643 % | 0.3516 % |
| FRR at 1 % FAR | 0.0263 % | 0.0040 % | 0.4282 % | 0.0634 % | 0.4174 % | 0.2783 % |
| FRR at 0.1 % FAR | 0.3595 % | 0.1757 % | 2.4100 % | 1.3160 % | 1.5920 % | 0.8040 % |
| FRR at 0.01 % FAR | 1.3890 % | 0.8037 % | 7.4850 % | 4.7100 % | 6.3080 % | 3.0770 % |

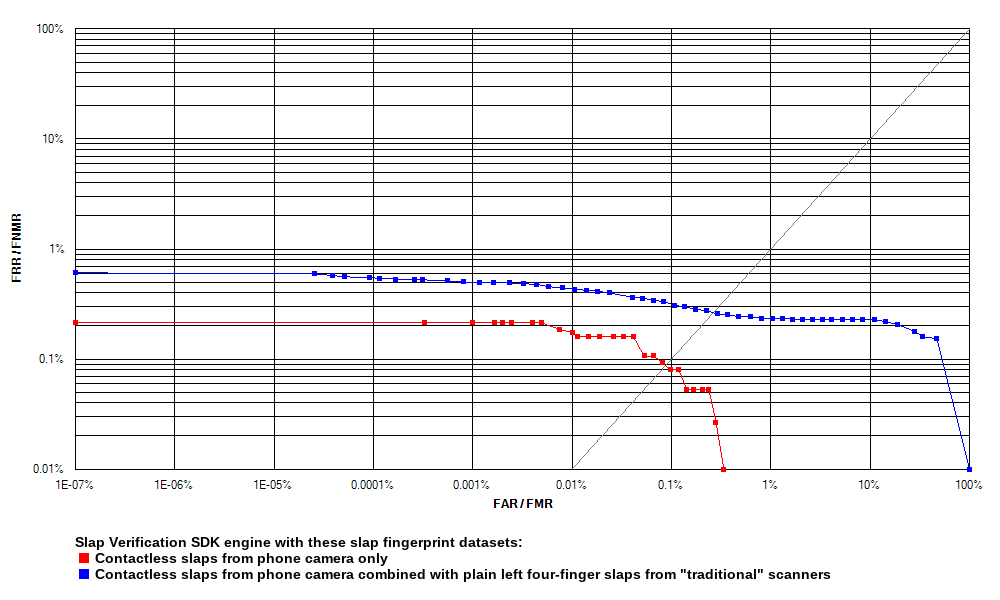

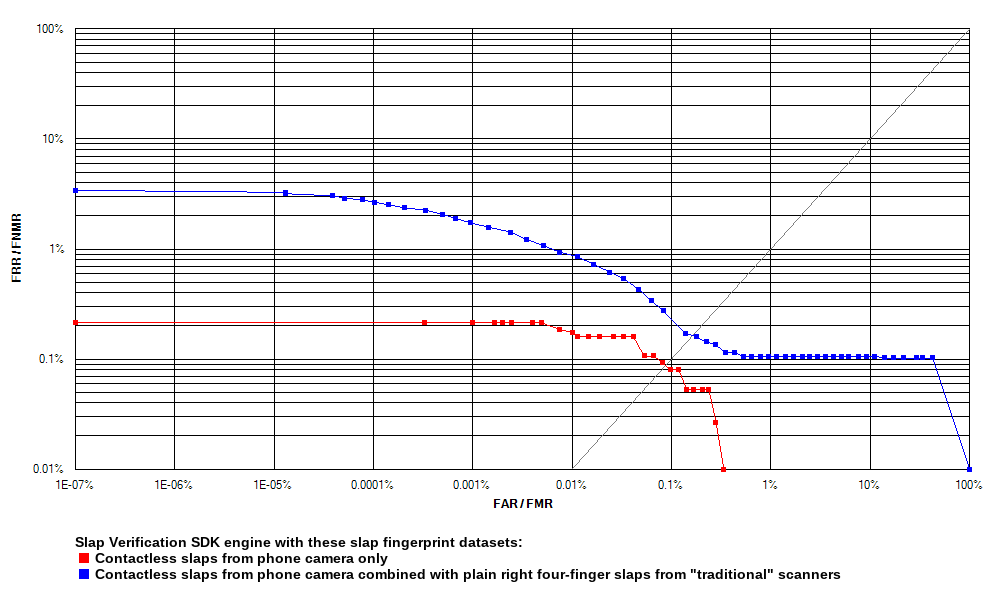

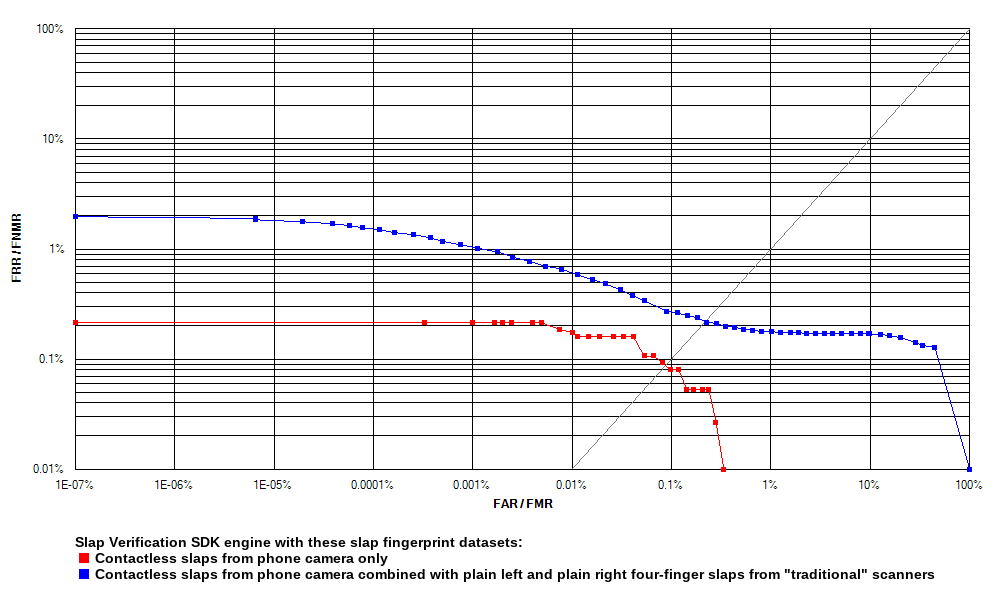

Slap template verification reliability evaluation

The MegaMatcher ID 2025.1 system's slap biometric engine has been tested using three Neurotechnology internal datasets, captured with different devices. All datasets contained biometric data belonging to the same persons, so one dataset could be used as a gallery, and another as a probe. These datasets were used:

- Dataset 1 – four-finger slaps from both left and right hands, captured contactlessly as photos with a Google Pixel 4a (5G) smartphone camera.

- Dataset 2 – left hand four-finger slaps, captured by contact with several different fingerprint readers and saved as images.

- Dataset 3 – right hand four-finger slaps, captured by contact with several different fingerprint readers and saved as images.

Four experiments were performed during the testing:

- Experiment 1 – all slaps from Dataset 1 were compared with each other.

- Experiment 2 – Dataset 1 was used as the probe, and Dataset 2 was used as the gallery.

- Experiment 3 – Dataset 1 was used as the probe, and Dataset 3 was used as the gallery.

- Experiment 4 – Dataset 1 was used as the probe, and both Dataset 2 and Dataset 3 were used as the gallery.

Receiver operating characteristic (ROC) curves are commonly used to demonstrate the recognition quality of an algorithm. ROC curves show the dependence of the false rejection rate (FRR) on the false acceptance rate (FAR). The charts below compare the Experiment 1 results with the results of other experiments.

MegaMatcher ID 2025.1 system's slap engine reliability tests

| | Experiment 1 | Experiment 2 | Experiment 3 | Experiment 4 |
|---|---|---|---|---|
| Genuine pairs | 7,506 | 26,859 | 24,469 | 51,328 |
| Impostor pairs | 607,934 | 7,732,866 | 7,732,116 | 15,464,982 |
| EER | 0.0869 % | 0.2496 % | 0.1548 % | 0.2095 % |
| FRR at 1 % FAR | 0.0000 % | 0.2346 % | 0.1063 % | 0.1773 % |
| FRR at 0.1 % FAR | 0.0799 % | 0.3314 % | 0.2738 % | 0.2728 % |
| FRR at 0.01 % FAR | 0.1865 % | 0.4431 % | 0.9359 % | 0.6566 % |
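The pair counts and rates in the table can be read together with a little arithmetic. The sketch below checks that the Experiment 4 pair counts are the sums of Experiments 2 and 3, and converts the Experiment 1 FRR percentages into approximate numbers of rejected genuine pairs. It assumes FRR is simply the number of rejected genuine pairs divided by the total number of genuine pairs; the source does not spell out the formula, and the variable names are hypothetical.

```python
# Pair counts copied from the slap results table.
pairs = {
    1: {"genuine": 7_506, "impostor": 607_934},
    2: {"genuine": 26_859, "impostor": 7_732_866},
    3: {"genuine": 24_469, "impostor": 7_732_116},
    4: {"genuine": 51_328, "impostor": 15_464_982},
}

# Experiment 4 uses Datasets 2 and 3 together as the gallery, so its pair
# counts should equal the sums of the Experiment 2 and 3 counts.
for kind in ("genuine", "impostor"):
    combined = pairs[2][kind] + pairs[3][kind]
    print(kind, combined == pairs[4][kind])   # True for both kinds

# Experiment 1 FRR values in percent, keyed by the FAR in percent.
frr_percent_exp1 = {1.0: 0.0000, 0.1: 0.0799, 0.01: 0.1865}

# Assumption: FRR = rejected genuine pairs / all genuine pairs.
rejected_exp1 = {
    far: round(frr / 100 * pairs[1]["genuine"])
    for far, frr in frr_percent_exp1.items()
}
print(rejected_exp1)  # {1.0: 0, 0.1: 6, 0.01: 14}
```

Read this way, the Experiment 1 figures correspond to only a handful of rejected genuine pairs out of 7,506, so small differences between the reported rates reflect very few individual comparisons.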